A/B testing is one of the most powerful tools for unlocking revenue growth without increasing ad spend. It gives you concrete answers to critical questions: Which design drives more purchases? Which copy persuades more users? Which layout removes friction from checkout?

At WIRO, we use structured experimentation to remove guesswork, validate ideas, and scale winning experiences across our clients’ stores. Here’s how.

Understanding A/B Testing

In today’s eCommerce landscape, it’s crucial to optimise your website and landing pages for better conversion rates. A/B testing, or split testing, is the practice of comparing two versions of a webpage, design element, or user flow to see which performs better and resonates more with your target audience.

One group of visitors sees Version A (control). Another sees Version B (variation). After enough traffic and time, you analyse the data to see which version achieved more conversions or sales.
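To make the split concrete, here is a minimal sketch of one common way to decide which version a visitor sees: hashing a visitor ID so the same person always lands in the same group. This is an illustration only, not a description of any particular testing tool; the function name `assign_variant`, the visitor IDs, and the experiment name are all hypothetical.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a visitor to a variant with roughly equal odds.

    Hashing the experiment name together with the visitor ID means the same
    visitor always sees the same version within one experiment, while
    different experiments split the audience independently.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    # Hypothetical traffic: count how 10,000 made-up visitors are split.
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"visitor-{i}", "filter-button-test")] += 1
    print(counts)  # the two groups should be close to an even split
```

Keeping the assignment deterministic matters because a returning visitor who flips between versions would muddy the comparison.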

In eCommerce, A/B testing can be applied to:

- Product page layouts

- Calls-to-action (CTA) design

- Checkout flow optimisations

- Navigation menus and filters

- Promotional copy and offers

- Upsell or cross-sell modules

It replaces subjective opinions with real behavioural data.

Benefits of A/B Testing in eCommerce

A/B testing can be a game-changer when it comes to improving website performance. Done well, it becomes a growth engine. It allows brands to:

- Make decisions with confidence: Replace gut feelings with data-backed insights.

- Increase conversion rates: Improve UX bottlenecks where customers drop off.

- Maximise ROI on development: Test ideas before you roll them out fully.

- Reduce business risk: Validate assumptions before committing budget.

- Drive continuous improvement: Each win stacks on top of the last.

- Improve user experience: Test and refine your website’s functionality and usability to raise customer satisfaction.

Setting Up a Successful A/B Test

At WIRO, our approach is disciplined and repeatable. Every test follows a clear process:

- Research & Audit: We use analytics, session recordings, heatmaps, and UX audits to uncover friction points.

- Hypothesis Development: Based on that research, we form a hypothesis to test. Each test starts with a clear statement: “We believe that changing X will improve Y because Z.”

- Prioritisation: Ideas are ranked by potential impact, effort, and confidence using frameworks like ICE or PIE.

- Design & Development: Our UX and development teams build two or more variants while ensuring performance and brand consistency.

- Experimentation: We run controlled experiments using industry-standard tools and check results for statistical significance before drawing conclusions.

- Analysis & Rollout: Once the experiment concludes, we measure uplift, validate results, and deploy winning variants sitewide.
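As a rough illustration of what checking statistical significance can look like, the sketch below runs a two-proportion z-test on conversion counts. This is one common choice for comparing conversion rates; it is not necessarily the test your tooling uses. The function name and the visitor and conversion counts are hypothetical, made up purely for the example.

```python
from math import erf, sqrt


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_a / n_a: conversions and visitors for the control (Version A).
    conv_b / n_b: conversions and visitors for the variation (Version B).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Standard normal CDF via the error function, then a two-sided p-value.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - cdf)
    return z, p_value


if __name__ == "__main__":
    # Hypothetical data: 2.0% vs 2.5% conversion on 10,000 visitors each.
    z, p = two_proportion_z_test(conv_a=200, n_a=10_000, conv_b=250, n_b=10_000)
    print(f"z = {z:.2f}, p = {p:.4f}")
    print("significant at 5%" if p < 0.05 else "not significant at 5%")
```

A threshold of 5% is a convention rather than a rule, and the sample size is best fixed before the test starts so that results are not read too early.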

WIRO’s A/B Testing Case Studies

Here’s how this framework has translated into real-world results.

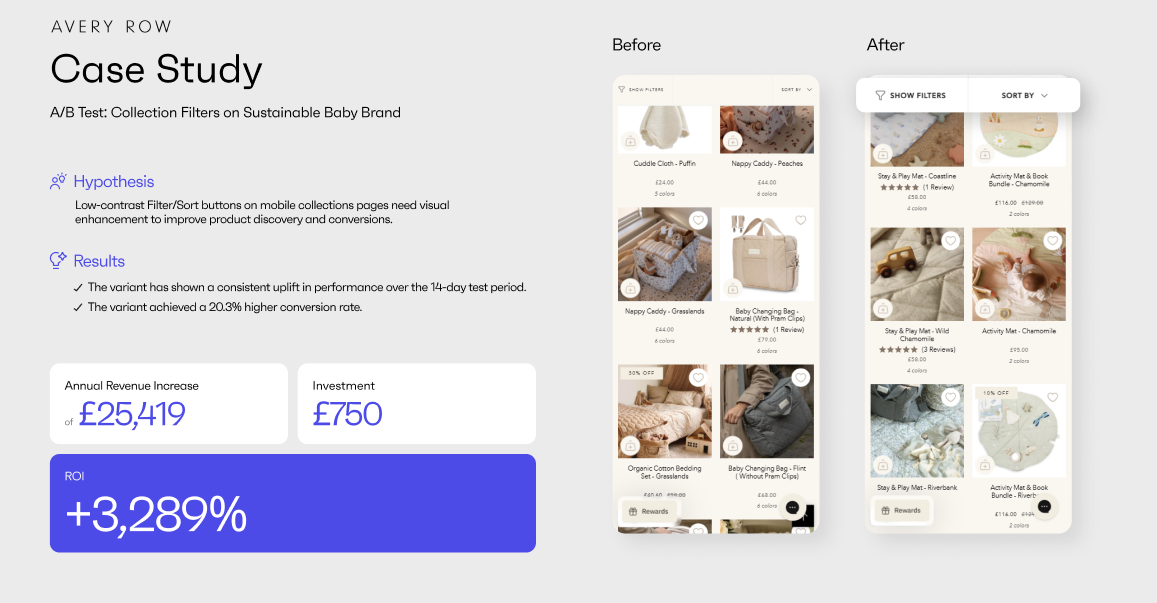

Avery Row - Boosting Mobile Product Discovery

The challenge:

On mobile, Avery Row’s filter and sort buttons were low-contrast and easily missed. Customers struggled to navigate large collections, limiting product discovery.

The test:

We designed a variation with enhanced, high-visibility filter and sort buttons and ran an A/B test over 14 days.

The results:

- +20.3% conversion rate

- £25,419 annual revenue increase

- £750 investment

- ROI: +3,289%

A minor visual tweak delivered a major conversion lift.
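For readers who want to check the arithmetic, the ROI figure above is consistent with the common formula (gain - cost) / cost. The formula is an assumption on our part, as is treating the annual revenue increase as the gain; the helper name `roi_percent` is hypothetical.

```python
def roi_percent(gain: float, cost: float) -> float:
    """Return ROI as a percentage, assuming ROI = (gain - cost) / cost."""
    return (gain - cost) / cost * 100


if __name__ == "__main__":
    # Avery Row figures quoted above: £25,419 annual revenue increase, £750 investment.
    avery_row = roi_percent(gain=25_419, cost=750)
    print(f"ROI: +{avery_row:,.0f}%")  # prints "ROI: +3,289%"
```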

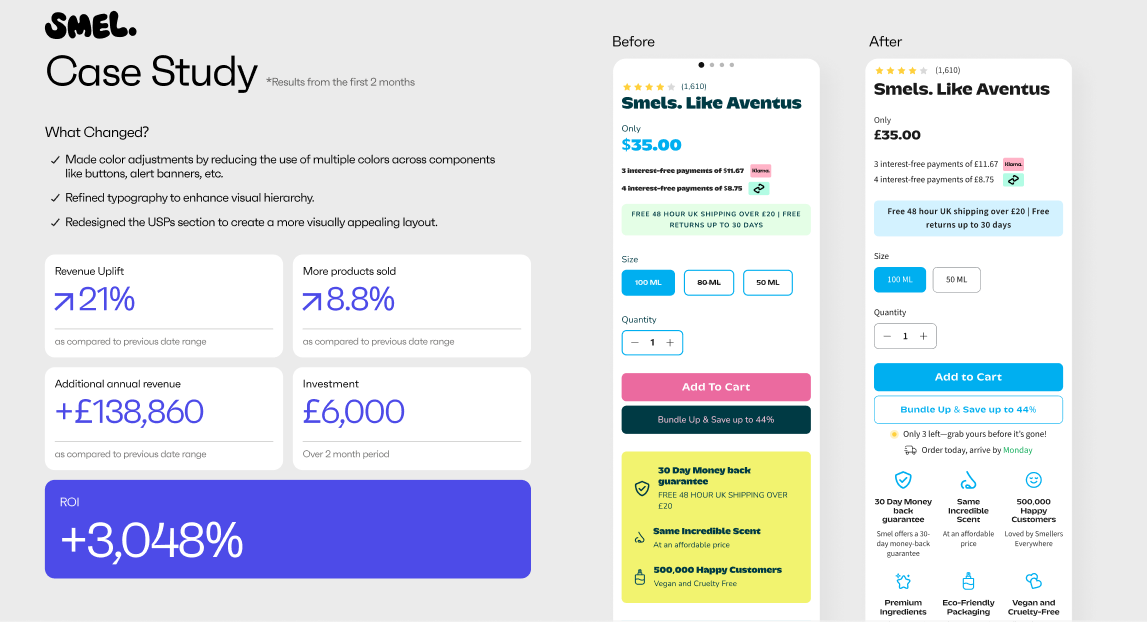

Smel - Increasing Conversion with Better Visual Hierarchy

The challenge:

Visual clutter was distracting users and slowing their path to purchase. CTAs and alert banners were overly colourful, and typography lacked hierarchy.

The test:

We created a cleaner variant by:

- Reducing colour overload on CTAs and banners

- Refining typography hierarchy

- Redesigning the USP block for greater visual clarity

The results:

- +21% revenue uplift

- +8.8% more products sold

- £138,860 projected additional annual revenue

- £6,000 investment

- ROI: +3,048% in 2 months

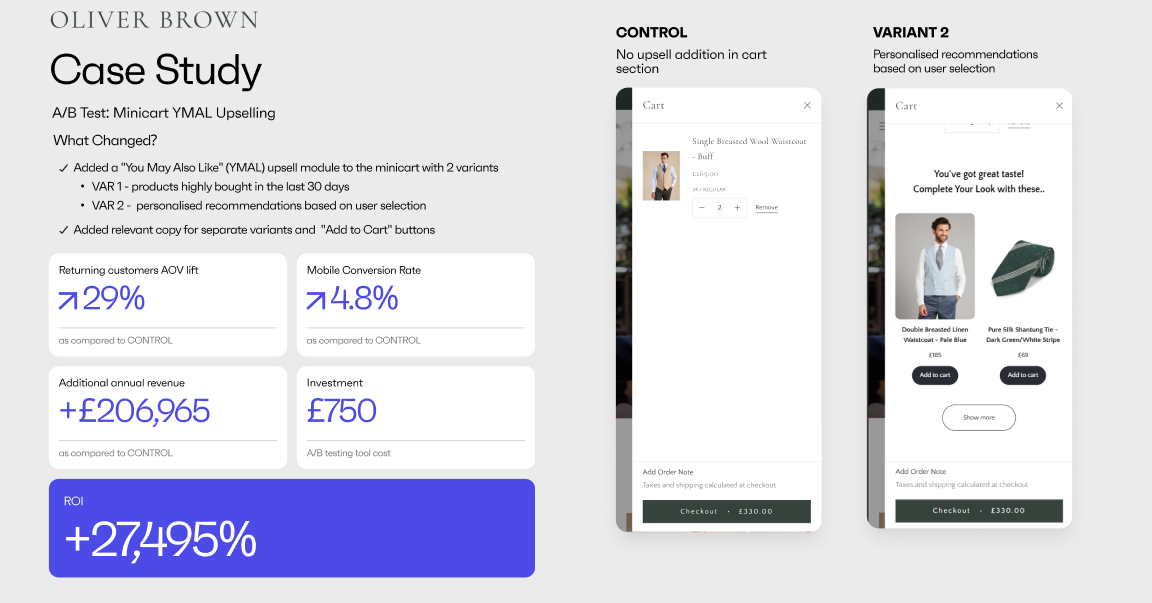

Oliver Brown - Driving AOV with Personalised Minicart Upsells

The challenge:

Oliver Brown had strong traffic but low average order values, especially on mobile.

The test:

We introduced “You May Also Like” upsell modules in the minicart and tested two variants:

- Top-selling products

- Personalised recommendations

Both featured persuasive copy and prominent add-to-cart CTAs.

The results:

- +29% AOV among returning customers

- +14.8% mobile conversion rate

- £206,965 additional annual revenue

- £750 investment

- ROI: +27,495%

Conclusion

A/B testing is not just about changing button colours; it is a powerful tool for driving business growth through data-driven decision-making.

WIRO’s structured, data-led experimentation approach helps brands uncover what really works, roll it out fast, and turn performance optimisation into profit.

Ready to Unlock Growth with A/B Testing?

Partner with WIRO’s CRO specialists and turn experimentation into a growth engine.
